Medical Text Written By Artificial Intelligence Outperforms Doctors

(Posted on Friday, December 15, 2023)

This article was originally published on Forbes on 12/15/2023.

This story is part of a series on the current progression in Regenerative Medicine. This piece discusses advances in artificial intelligence.

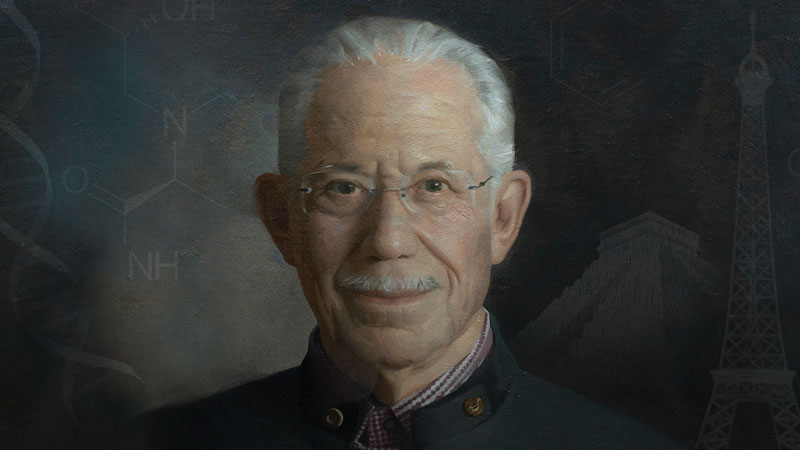

In 1999, I defined regenerative medicine as the collection of interventions that restore to normal function tissues and organs that have been damaged by disease, injured by trauma, or worn by time. I include a full spectrum of chemical, gene, and protein-based medicines, cell-based therapies, and biomechanical interventions that achieve that goal.

Can artificial intelligence answer your medical questions? In doing so, could AI lift some of the load from the shoulders of doctors and medical technicians?

Large language models, such as ChatGPT, generate text by training on large datasets and using statistics to produce the most probable response to a user’s query. A major concern is that these models are not trained with medical use in mind. Most large language models draw on vast swaths of the internet as their dataset, which makes them, in essence, a smarter and faster Google search.
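To make the idea of "the most probable response" concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT or any real large language model is built (those use neural networks trained on billions of words). It simply counts which word most often follows another in a made-up three-sentence corpus. The corpus and the function names `train_bigrams` and `most_probable_next` are invented for this illustration.

```python
from collections import Counter, defaultdict

# Made-up toy corpus for illustration only; not real clinical text.
corpus = (
    "the patient reports chest pain . "
    "the patient reports mild fever . "
    "the patient denies chest pain ."
)

def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    tokens = text.split()
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def most_probable_next(counts, word):
    """Return the word seen most often after `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

model = train_bigrams(corpus)
print(most_probable_next(model, "patient"))  # prints "reports"
```

In this toy corpus, "patient" is followed by "reports" twice and "denies" once, so the model picks "reports". The quality of the answer depends entirely on the text the model was trained on, which is the concern the paragraph above raises.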

Most would agree that googling a question does not necessarily yield a correct answer, and the same concern applies to ChatGPT. However, if a large language model were trained specifically on clinical healthcare datasets, the results could be far more accurate and trustworthy.

This was precisely the aim of a study published in npj Digital Medicine, a Nature Portfolio journal, by Dr. Cheng Peng and colleagues at the University of Florida. Their program, appropriately named GatorTronGPT, generated medical text with linguistic readability and clinical relevance roughly equivalent to text written by actual physicians.

Here, I will examine their findings and discuss the impact on the future of artificial intelligence in healthcare.

Rather than build an entirely new large language model from scratch, Peng and colleagues used the skeleton of GPT-3. In other words, GatorTronGPT would learn in the same way as the large language models that came before it. However, rather than learning from unrestricted datasets from all corners of humanity, GatorTronGPT was trained exclusively on 82 billion words of de-identified clinical text and the 195-billion-word “Pile” dataset, a commonly used general-purpose training corpus included to help the program communicate effectively.

GatorTronGPT was evaluated on two main criteria: linguistic readability and clinical relevance.

To evaluate the linguistic readability of GatorTronGPT, the researchers turned to natural language processing programs, which combine computational linguistics, machine learning, and deep learning models to process human language. These programs can assess readability across datasets far too large for any person to read.

The researchers compared the readability of 1, 5, 10, and 20 billion words of synthetic clinical text from GatorTronGPT versus 90 billion words of real text from the University of Florida medical archives.

They found that the synthetic text from GatorTronGPT was at least marginally (>1%) more readable than real-world text on eight benchmarks when 1 billion words were used. When the natural language processor was fed 5, 10, or 20 billion words, readability was roughly the same between GatorTronGPT and real-world text.

In other words, the specialized large language model is at least as readable as real medical text. Its small readability edge fades as the volume of text grows, which would make sense as the discussion becomes more specialized and nuanced.

As for clinical relevance, the researchers used what they refer to as the Physicians’ Turing test. The original Turing test, named for the mathematician Dr. Alan Turing, evaluates whether a person can tell if the entity they are communicating with is a human or a machine.

In the Physicians’ Turing test, two physicians were each presented with 30 notes of real medical text and 30 notes of synthetic text written by GatorTronGPT. Of the 30 synthetic notes, the two physicians correctly identified only nine (30.0%) and 13 (43.3%), respectively, as synthetic. In other words, more than half the time, the physicians thought an AI-written note was human, clearing the Turing test threshold of 30%.
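The arithmetic behind those percentages can be checked in a few lines of Python. This is a minimal sketch using only the counts reported above. The labels `physician_1` and `physician_2` and the function name are placeholders chosen for illustration, and the 30% threshold is the figure cited in this article.

```python
# Counts reported in the article: out of 30 synthetic notes, the two
# physicians correctly flagged 9 and 13 as machine-written.
SYNTHETIC_NOTES = 30
correctly_flagged = {"physician_1": 9, "physician_2": 13}  # placeholder labels

# Threshold cited in the article for passing the Turing test.
PASS_THRESHOLD = 0.30

def mistaken_for_human_rate(flagged, total):
    """Fraction of synthetic notes the reader believed were human-written."""
    return (total - flagged) / total

rates = {
    name: mistaken_for_human_rate(flagged, SYNTHETIC_NOTES)
    for name, flagged in correctly_flagged.items()
}

for name, rate in rates.items():
    detected = correctly_flagged[name] / SYNTHETIC_NOTES
    print(f"{name}: detected {detected:.1%}, mistook {rate:.1%} for human")
```

Under these counts, the synthetic notes were mistaken for human writing 70.0% and 56.7% of the time, both well above the 30% threshold.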

Given GatorTronGPT’s improved medical aptitude over general large language models such as ChatGPT, it is likely that it or a similar model will be used in the coming months and years as an alternative to the currently more widely available ChatGPT.

Such a system will certainly be used in the administrative aspects of the healthcare system, including analysis of clinical text, documentation of patient reports, scheduling and intake, etc.

Even with the improved GatorTronGPT, there will likely still be hesitation to use large language models as an alternative to physicians or technicians. There will need to be continued large-scale research on the validity and accuracy of these systems. While the Turing test presented in the above study is notable, it must be repeated with hundreds of physicians rather than just two.

There will be concerns about racial or sex-based biases, about whether human or robotic healthcare is distributed unevenly between rich and poor, and about the intricacies of personal healthcare being handled by a model based on massive datasets.

Ultimately, including artificial intelligence in healthcare seems inevitable, so we must attempt to make it as accurate and trustworthy as possible to ensure its application is well received when it becomes a mainstream avenue.