The Ethics Of Brain-Machine Interfaces: Concerns And Considerations

(Posted on Friday, May 10, 2024)

This article was originally published on Forbes on 5/10/24.

This story is part of a series on current progress in Regenerative Medicine. This piece discusses the ethics of brain-machine interfaces.

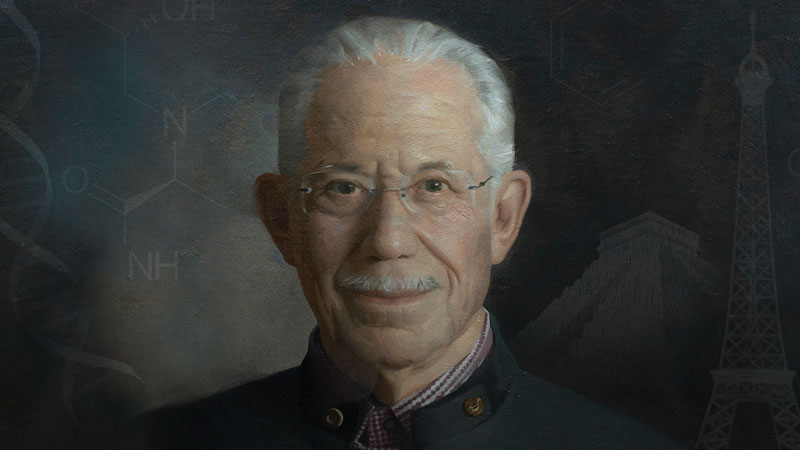

In 1999, I defined regenerative medicine as the collection of interventions that restore normal function to tissues and organs damaged by disease, injured by trauma, or worn by time. I include a full spectrum of chemical, gene, and protein-based medicines, cell-based therapies, and biomechanical interventions that achieve that goal.

As we approach a new age of technological integration, questions about the privacy and ethics of certain technologies will emerge. For several months, I have discussed the rapidly advancing field of brain-machine interfaces. This burgeoning technology connects our minds to external devices, potentially creating a more accessible world for people who could benefit from it.

However, many of these devices rely on a direct or indirect electrical connection to the brain, which naturally raises several questions. Is my brain data being stored and shared without my knowledge? Will my brain data be used against me in some future situation? Or, more dystopian still, how do I know my thoughts are my own if I am connected to a computer?

The study of brain-machine interface ethics will likely emerge as a field soon after the technology itself becomes widespread, much as sociologists now study the impacts of social media and smartphone use. We must voice these concerns now and be prepared for these conversations when they arise, rather than ignoring them until they become a problem.

There are at least four areas of ethical concern regarding brain-machine interfaces, and likely many more.

First is the sensitive nature of the data collected by brain-machine interfaces. These devices can access a person’s innermost thoughts, emotional state, and mental health. Because this information is so personal, it must be transmitted and handled with great care to prevent unauthorized access or misuse.

Many major corporations have been found to gather and store personal data without the express permission of their products’ users. A similar situation seems likely in a future where brain-machine interfaces are widely adopted, except that the personal data could be far more intimate.

Notably, the state of Colorado has taken a significant step by introducing privacy regulations for commercial neurotechnology devices.

Second is the potential for discrimination and exploitation. Brain-machine interface data could reveal information about a person’s truthfulness, psychological traits, and attitudes, which could lead to workplace discrimination or other unethical uses.

Imagine entering a job interview fully prepared and confident in your odds, only for the employer to reveal that a background check turned up personal information gathered through your brain-machine interface device. Privacy quickly disappears once your private thoughts are no longer private.

Third is the risk of hacking and external control. Because brain-machine interfaces often communicate wirelessly, they are more exposed to being hacked and controlled by malicious actors. Such an attack could expose private information or manipulate the device in ways that harm the user.

In the United States alone, over 800,000 cybercrimes are reported each year. Typically, hackers break into private accounts such as email, gain access to more sensitive information like bank passwords, and then extort the individual or steal their money. Similar attacks could target brain-machine interfaces.

Fourth is the need for more legal protections. While existing laws like HIPAA and GDPR offer some privacy safeguards, it remains unclear whether they will adequately address the unique privacy challenges posed by the volume and sensitivity of data generated by advanced brain-machine interfaces. These laws will need to be updated to include specific workplace and personal protections for when brain-machine interface data leaks inevitably occur.

These are questions we will have to address sooner rather than later. These technologies are advancing rapidly in the United States, and they may soon reach consumers at scale worldwide. Recent reports suggest that the Chinese government is working with domestic biotech companies to accelerate the production of brain-machine interfaces, with the goal of bringing cognitive enhancement to its massive population.

One report notes that China is more interested in noninvasive brain-machine interfaces than invasive ones, an approach that may help mitigate some of the potential ethical issues.

Wearable technologies could be outfitted with more hardware and software protections than an implant fitted directly to the skull. While implants currently capture more robust brain data, wearable technology is rapidly catching up. For instance, ultrasound and electrography technologies are viable candidates for precise brain imaging.

Brain-machine interface users will likely fall into two main groups: people with severe disorders or physical disabilities who will require invasive brain-machine interfaces, and people without such conditions, who will likely use noninvasive versions.

We implore those who develop these machines to protect both groups as fully as possible from ethical exploitation. For these technologies to be adopted over the long term, the general public must be able to trust that their personal information is just that: personal.