A Golden Opportunity: Using Gold Contacts to Correct Color Blindness

(Posted on Monday, December 18, 2023)

This story is part of a series on current progress in regenerative medicine, within a sub-series dedicated to the eye and advances in restoring vision.

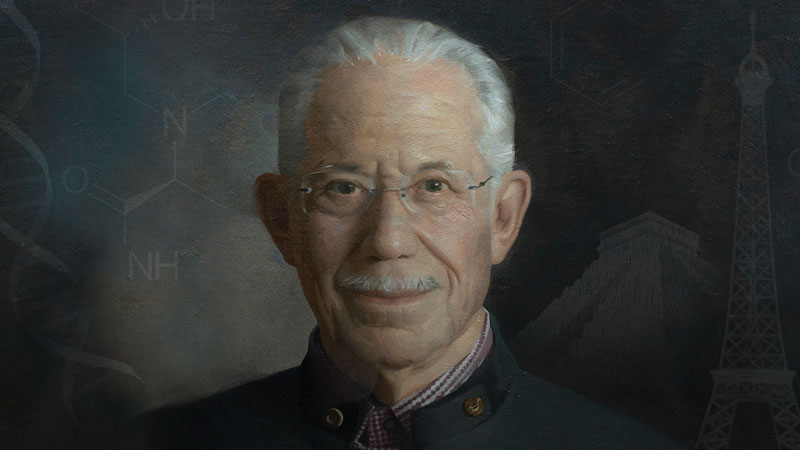

In 1999, I defined regenerative medicine as the collection of interventions that restore tissues and organs damaged by disease, injured by trauma, or worn by time to normal function. I include a full spectrum of chemical, gene, and protein-based medicines, cell-based therapies, and biomechanical interventions that achieve that goal.

Color is an integral part of our daily lives, from the vibrant hues of nature to the vivid visuals of art and media. However, not everyone can fully appreciate the spectrum of colors surrounding us. Color blindness, also known as color vision deficiency (CVD), affects millions worldwide, hindering their ability to distinguish between specific colors.

The condition most often stems from a genetic mutation that affects the proteins responsible for detecting color in the retina’s cone cells. CVD can narrow the range of activities and occupations open to patients and limit their ability to perform tasks that depend on telling colors apart.

Fortunately, modern technology offers a solution in the form of color-correcting contact lenses, with gold contacts being a promising new development that offers hope for those seeking a more natural and effective treatment.

Tinted Lenses To Correct Color Vision Deficiency

Tinted glasses are wearable devices that can help manage the difficulties of CVD, and they are the most commonly used corrective aid for color deficiencies. The lenses selectively filter out specific wavelengths of light, which enhances color contrast and improves perception. As a result, wearers can distinguish between colors that previously looked the same.
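To make the filtering idea concrete, here is a minimal sketch rather than a model of any real product. It assumes Gaussian cone sensitivities with an anomalous M cone sitting close to the L cone, two flat-band test lights, and a hypothetical lens that passes only 20% of light between 540 and 580 nm. Every number and function name in it is an illustrative assumption, not measured data.

```python
import math

# Illustrative assumptions, not measured data: Gaussian cone sensitivities,
# with the M cone peak shifted close to the L cone peak.
L_PEAK_NM, M_PEAK_NM, CONE_WIDTH_NM = 560.0, 550.0, 40.0
NOTCH_BAND_NM = (540, 580)   # hypothetical band the tinted lens attenuates
NOTCH_TRANSMISSION = 0.2     # fraction of light passed inside that band

WAVELENGTHS_NM = range(400, 701)  # visible range sampled in 1 nm steps


def cone_sensitivity(wavelength_nm, peak_nm):
    """Gaussian stand-in for a cone's spectral sensitivity."""
    return math.exp(-((wavelength_nm - peak_nm) ** 2) / (2 * CONE_WIDTH_NM ** 2))


def band_light(lo_nm, hi_nm):
    """Flat spectrum between lo_nm and hi_nm, zero elsewhere."""
    return [1.0 if lo_nm <= w <= hi_nm else 0.0 for w in WAVELENGTHS_NM]


def lens_transmission(wavelength_nm, filtered):
    """Fraction of light reaching the eye at this wavelength."""
    lo, hi = NOTCH_BAND_NM
    if filtered and lo <= wavelength_nm <= hi:
        return NOTCH_TRANSMISSION
    return 1.0


def l_to_m_ratio(spectrum, filtered):
    """Ratio of L-cone to M-cone response for a given light."""
    l_resp = m_resp = 0.0
    for w, power in zip(WAVELENGTHS_NM, spectrum):
        seen = power * lens_transmission(w, filtered)
        l_resp += seen * cone_sensitivity(w, L_PEAK_NM)
        m_resp += seen * cone_sensitivity(w, M_PEAK_NM)
    return l_resp / m_resp


def red_green_contrast(filtered):
    """How differently a reddish and a greenish light drive the two cones."""
    greenish = band_light(480, 570)
    reddish = band_light(550, 640)
    return l_to_m_ratio(reddish, filtered) / l_to_m_ratio(greenish, filtered)


print("contrast without lens:", red_green_contrast(False))
print("contrast with lens:   ", red_green_contrast(True))
```

Under these assumptions the contrast value is larger with the lens than without it. The filter removes the wavelengths where the two cone types respond most alike, so the light that remains drives them more differently.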

One well-known company that provides tinted glasses for CVD is EnChroma. While these lenses are effective, they are not a permanent solution. They can also be inconvenient, since the effect lasts only while the glasses are worn. Despite these limitations, tinted glasses remain a popular option for individuals with CVD who want to improve their color perception.

Contact Lenses for Color Blindness

Recent technological advances have led to the use of contact lenses that incorporate special filters. These filters selectively block specific wavelengths of light, enhancing the ability of individuals with CVD to differentiate between colors. The filters can be customized to each individual’s needs, allowing for a more personalized approach to correcting CVD.

Compared to tinted glasses, contact lenses are more comfortable and less obtrusive. They provide a more natural viewing experience since they sit directly on the eye rather than in front of it. Additionally, contact lenses can be worn during sports or other physical activities where glasses might be less practical.

However, not all contact lenses are equally suited to treating CVD, and one novel type is drawing particular interest.

A New Golden Standard of CVD Treatment?

Gold is highly suitable for making contact lenses because it is biocompatible and has excellent optical properties. Researchers have used these properties to create gold nanocomposite contact lenses that incorporate gold nanoparticles into the contact lens matrix. These gold nanoparticles are engineered to selectively filter out specific wavelengths of light, which can help improve color differentiation and perception in individuals with color vision deficiency (CVD).

A recent study published in ACS Nano investigated the efficacy of these gold nanocomposite contact lenses in treating CVD. The study recruited participants with CVD to wear gold contact lenses and complete various color-related tasks. It found that the lenses significantly improved color discrimination in individuals with mild to moderate CVD. Furthermore, the lenses were well tolerated and did not produce any adverse effects.

Figure: (a) Transmission spectra of the nanocomposites; (b) solutions before polymerization (scale: 10 mm); (c) steps in polymerizing the solutions and obtaining the lenses; (d) polymerized lenses at different nanoparticle concentrations (scale: 10 mm).

While the results of this study are promising, some limitations need to be addressed. First, the study’s sample size was relatively small, so further research with a larger sample is needed to confirm the effectiveness of gold contacts. Second, the study focused only on individuals with mild to moderate CVD, and it remains to be seen whether these contacts will be effective in individuals with severe CVD.

Despite these limitations, gold nanocomposite contact lenses represent an exciting development in CVD correction. With further research and development, these lenses could provide a more natural and permanent solution for color blindness.

The Future is Here and In Color

This research also opens the possibility of developing other nanocomposite materials to correct visual impairments. For example, such materials could be used to create contact lenses that improve visual acuity, or sharpness. The potential applications of nanocomposite materials in ophthalmology are vast and exciting, and it will be fascinating to see how the field develops.