Under Pressure: Advances in Haptic Textiles for Sensory Feedback

(Posted on Tuesday, October 10, 2023)

Rice University

This story was originally published on Forbes on 10/10/23.

This story is part of a series on current progress in regenerative medicine. This piece discusses advances in the use of haptic textiles for sensory feedback.

In 1999, I defined regenerative medicine as the collection of interventions that restore to normal function tissues and organs that have been damaged by disease, injured by trauma, or worn by time. I include a full spectrum of chemical, gene, and protein-based medicines, cell-based therapies, and biomechanical interventions that achieve that goal.

New haptic textile systems could revolutionize how we interact with our environment, not only medically but recreationally as well. Haptic technologies involve the recreation of the sense of touch through a wearable device and computer system. These technologies may be used for the generation of virtual objects within computer simulations, the manipulation of virtual objects, or telerobotics, to name a few use cases. Haptic devices may integrate tactile sensors that measure forces applied by the user on the interface or exerted on the user by an external device.

Haptic technology in regenerative medicine is a more recent and exciting development. One example is medical simulation, in which students practice surgery with haptic responses that reproduce the feel of bodily tissues. Haptics may also be used by amputees to improve the function of prosthetic limbs. I have written previously about the promise of haptic systems, such as the development of artificial skin.

Recently, a new version of haptic technology has been unveiled: a fluidically programmed wearable textile. I have also written about smart fabrics in the past, but the wearable textiles described by Barclay Jumet and colleagues from Rice University in Cell are different in that they are specifically designed for user applications. Here, I will discuss their invention and contemplate how it may be used in the near future.

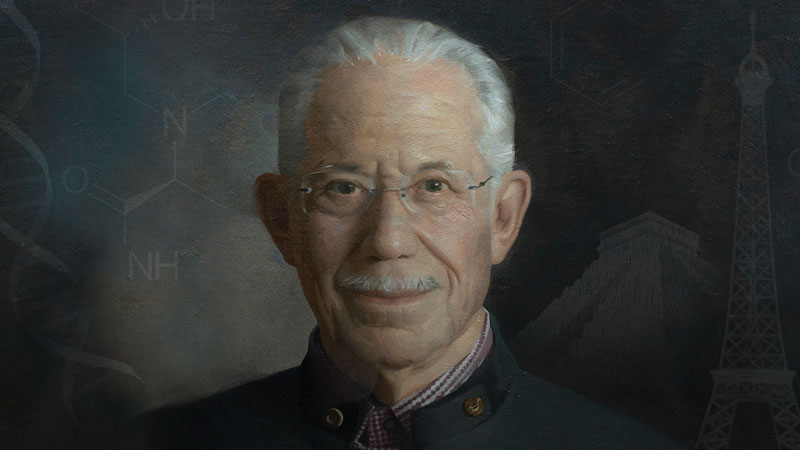

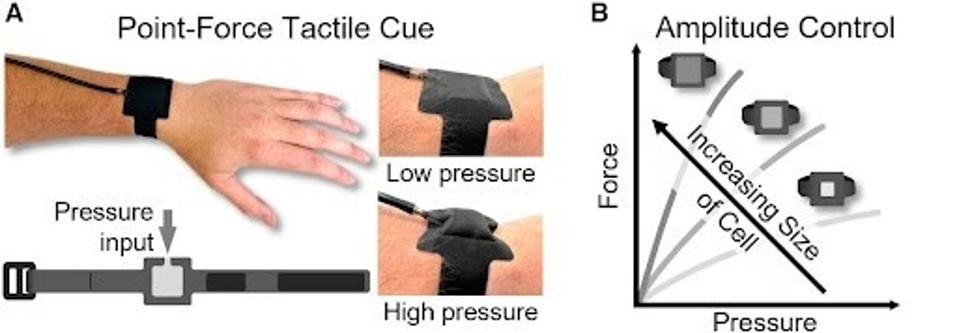

What makes the Jumet smart textiles unique is their use of fluidic programming. Without going into the details of computer programming, fluidic programming allows for a streamlined electrical system that omits many of the parts traditional programming requires, so the system can be installed easily in small, flexible spaces such as an armband.

The textiles involve an air pressure input and output pocket. These air pockets can be inflated and deflated to apply differing amounts of pressure to the user over varying lengths of time, enabling a computer to communicate with the user through those differences in pressure.

FIGURE 1: The schematized concept of wearable haptic textiles.

The fabric is built as a multilayer system that includes a thermoplastic polyurethane to prevent overheating, the inflatable cells, and the electrical systems. The armband is then connected to belt-worn components, which include the battery and the pressurized CO2 supply.

FIGURE 2: Haptic armband system in use with belt attachments.

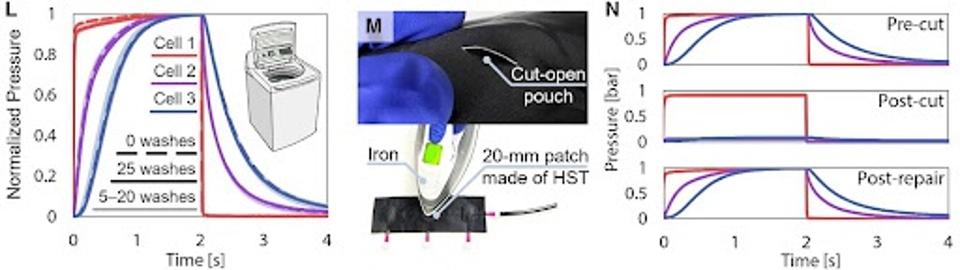

Not only is the system lightweight and relatively unrestrictive compared to other haptic feedback systems and wearable technologies in general, but it is also incredibly durable. In a durability test, the haptic armband remained consistent in pressure and accuracy through 25 washes in a typical home washing machine, tested after every five washes.

In another test, the air pocket was intentionally cut open with a knife. Rather than carrying out an expensive repair, the researchers simply ironed a small patch over the cut, an inexpensive and instant fix. Pre-cut and post-repair pressure tests were identical, indicating the armband could be repaired at home without expert intervention.

FIGURE 3: At-home washing and repair simulations were successful in retaining full function.

To test the accuracy of the air pocket system, the researchers remotely directed a user to walk along shapes in a field using only the air pockets as a guide, with pressure in the front indicating forward, pressure on the left band indicating a left turn, and so on. An opaque film was placed over the arms to remove visual hints for the user, and headphones were worn to block audio feedback.

Across fourteen participants, the average accuracy was 87.3%. Most errors came from confusing forward and backward, both of which applied pressure to both arms but in slightly different positions. Overall, the researchers considered 87.3% a more than satisfactory outcome.
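For readers who think in code, the cueing scheme in this walking test can be sketched roughly as follows. This is only an illustration under stated assumptions, not the researchers' implementation: the pocket labels ("front", "back", "side"), the names `CUE_MAP`, `pockets_for`, and `accuracy`, and the sample trials are all hypothetical. It maps each direction to the pockets that would inflate and scores how often a user's interpretation matches the cue that was sent.

```python
from enum import Enum


class Direction(Enum):
    FORWARD = "forward"
    BACKWARD = "backward"
    LEFT = "left"
    RIGHT = "right"


# Hypothetical pocket labels as (arm, position) pairs. The article only says
# that left/right cues use one band, and that forward/backward use both arms
# in slightly different positions; the exact positions are assumed here.
CUE_MAP = {
    Direction.LEFT: {("left", "side")},
    Direction.RIGHT: {("right", "side")},
    Direction.FORWARD: {("left", "front"), ("right", "front")},
    Direction.BACKWARD: {("left", "back"), ("right", "back")},
}


def pockets_for(direction):
    """Return the set of pockets to inflate for a directional cue."""
    return CUE_MAP[direction]


def accuracy(trials):
    """Fraction of (sent, perceived) pairs in which the user was correct."""
    if not trials:
        raise ValueError("at least one trial is required")
    correct = sum(1 for sent, perceived in trials if sent == perceived)
    return correct / len(trials)


# Made-up trials for illustration only, not data from the study.
example_trials = [
    (Direction.FORWARD, Direction.FORWARD),
    (Direction.FORWARD, Direction.BACKWARD),  # the confusion noted above
    (Direction.LEFT, Direction.LEFT),
    (Direction.RIGHT, Direction.RIGHT),
]
print(accuracy(example_trials))
```

In this sketch, forward and backward share the same two arms and differ only in position, which is one way to see why those two cues would be the easiest to mix up.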

They conducted a similar test with a single user on an electric scooter, using the pressure feedback as directional signals to get from point A to point B. While the experiment included only one user, they interpreted pressure cues with 100% accuracy.

Perhaps among the most notable aspects of the system is cost. While wearable technologies can sometimes run thousands or tens of thousands of dollars, the researchers anticipate an eventual retail price of under $300, given the simplicity of the fluidic electrical system and the inexpensive materials involved.

Such a system could be used in all manner of fields. With respect to regenerative medicine, it could be used by elderly people as a walking assistant or reminder technology. With respect to recreational uses, I could see implementations for virtual reality gaming, enhanced remote control of machines or devices, and so on.

Another exciting aspect of Jumet’s haptic system is its readiness for widespread use compared with many other wearable technologies. Wearable technology that involves artificial intelligence or robotic assistance is still years away, as extensive safety and efficacy tests must take place. Jumet’s haptic armband is a relatively simple and accessible device that could be released sooner rather than later.

While uses for the fluidic armband will need continued research and development, the device itself seems primed for widespread use. I eagerly anticipate its release and reception.